My Path as a Techie to Learning About Equity

Photo Credit: Getty Images

Author: Jonathan Rotner

A couple of years ago, a small team of researchers and I published a website and a paper called “AI Fails and How We Can Learn from Them.” A couple of its stories have really stayed with me:

- A psychiatrist realized that Facebook’s “people you may know” algorithm was recommending her patients to each other as potential “friends,” since they were all visiting the same location.

- An AI for allocating healthcare services offered more care to White patients than to equally sick Black patients. The algorithm, trained on real data, used healthcare costs as a proxy for health needs. Because less money has historically been spent on Black patients than on White patients with the same level of need, the algorithm rated White patients as needier, and they received more care.[1]

As an electrical engineer, my first reaction was that these are engineering problems that can be analyzed and fixed.

However, they’re actually issues of Equity.

The ‘people you may know’ example reminded me that there are always unintended consequences that can result in real harm. The healthcare AI emphasized to me the danger of relying on existing data that purports to be objective yet stems from a history of systemic injustice, as well as the difficulty of trying to help a variety of people who have very different needs.

A third story also stayed with me, but it left me with a very different takeaway.

- Researchers asked a group of male domestic violence offenders to step into a virtual reality experience from the point of view of a domestic abuse victim. The virtual simulation placed each man in a life-sized female body. The results were real: the offenders’ ability to recognize their counterparts’ emotions improved, potentially leading to more empathy and less violence.

This example showed me that when a technology is specifically designed to help those in need, and it’s driven by the input and goals of those in need, the outcomes can be equitable and extraordinary.

From Equity experts, I learned that “Equity” is the elimination of disproportion and disparity. Equity occurs when outcomes are not predictable based on the characteristics of an individual or group. Equity isn’t the same as “Equality”: Equality assumes we all have the same needs and goals, while Equity directs us to understand the specific obstacles each of us faces and to leverage the specific resources each of us possesses. Nor is Equity the same as “Fairness”: Fairness is an ideal, a philosophy that guides us toward pursuing good outcomes, while Equity doesn’t care which philosophy is followed; instead, it emphasizes that goodness must be measured by impact, not intent.

I majored in electrical engineering, earned a master’s degree in circuit design, and worked on 3D-printing prototyping and artificial intelligence research projects for the national defense sector. None of those experiences prepared me to consider Equity or equipped me with tools to design for it. I needed to practice designing for Equity to understand it, let alone get better at it.

More and more issues of Equity, like the ones above, are coming to light in advanced technology. They’re not simple engineering problems but products of hidden biases and limited perspectives in data and algorithm creation. Just as engineers and developers learn fundamental algorithms for efficient use of computer resources, study theories of computability and compiler design, and practice by writing programs in different computer languages, we have to start training in Equity. That means learning to view technologies as part of a complex ecosystem that interacts with and influences human behavior, decision making, preferences, strategies, and ways of life, sometimes beneficially and sometimes less so. Once we understand why Equity matters, we can incorporate it into technological design.

That’s why I’m working to develop an “Equity for Techies” workbook.

—

I created an “Equity for Techies” workbook because I wanted to make Equity seem less foreign to my fellow Techies. I drew on established design techniques and some remarkable authors[2] to inform this workbook. The goal of the workbook is to help Techie teams define and redefine success for their project by linking success criteria to equitable outcomes.

It starts with a way to understand Equity and how to work toward equitable outcomes.

Part 1 introduces patterns of technological harm, called technology risk zones,[3] which include:

- Surveillance

- Disinformation

- Exclusion

- Algorithmic bias

- Addiction

- Data control and ownership

- Bad actors

- Outsized power

This section asks the reader to assess which zones are most applicable to their project and which groups of people might be at greater risk. The team can walk away with a sense of which populations might be at particular risk from the results of its effort and a greater appreciation of outcomes to avoid.

Part 2 flips the script and asks the team to imagine alternatives that place Equity at the core of the effort. For every technology risk zone there is an equitable opportunity:

- Protect privacy

- Promote truth

- Broaden access

- Judge by algorithmic impact

- Inspire healthy behaviors

- Increase data ownership

- Encourage civility

- Facilitate choice

This section asks the reader to explore equitable opportunities specifically for the groups at particular risk, as identified in the previous section. The team can walk away with a prioritized list of group(s) who can benefit from its efforts, as well as ideas for the types of services or resources that might benefit members of those groups.

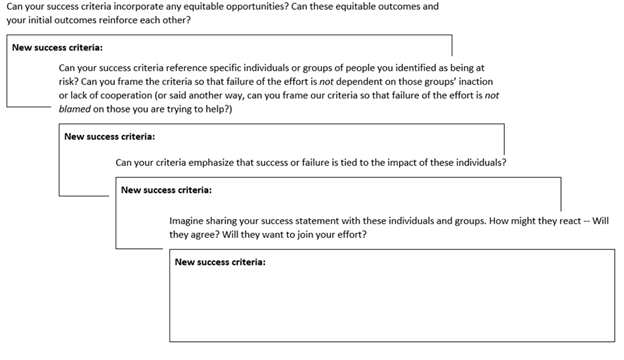

Part 3 establishes guideposts to help the team revisit success criteria. Prompts that draw on principles of Equity and on the previous exercises invite the team to create new success criteria. The prompts are captured below.

The final exercise prompts action. It offers many examples of steps that help a team act on its thinking. Many of the examples point to other great tools that MITRE’s Social Justice Platform and Innovation Toolkit initiatives have put together, including tools that incorporate an Equity lens into business innovation; a framework for assessing Equity in federal programs and policy; and Equity indices, models, and indicator libraries. After completing this section, the team can walk away with an actionable plan that specifies who is doing what by when to ensure successful, equitable outcomes.

—

Changing our habits and patterns is hard, regardless of topic. Changing our thinking and actions around Equity is really hard. This takes practice. Humans learn by repetition. I repeat, humans learn by repetition.

I’m trying to get better at understanding Equity and incorporating its principles into my work. Right now, the ‘Equity for Techies’ workbook is in draft form, and I’m looking for teams (inside and outside of MITRE) that want to test it out on their projects, so we can continue to make it clearer and more useful. Please reach out if you’d like to participate and learn more!

In the meantime, let’s keep learning, keep trying, keep checking in with others to see how we’re doing. Engaging in this topic isn’t quick and it isn’t easy. But it’s important, because as Techies our work affects others, whether we’re aware of them or not, whether we intend to or not. Working on this together will help us all practice and get better. Share your successes, share your stumbles, share your stories, and you’re always welcome to reach out to me at jrotner@mitre.org.

Jonathan Rotner is a human-centered technologist who helps program managers, algorithm developers, and operators appreciate technology’s impact on human behavior. He works to increase communication and trust in automated processes.

A special thank you to Howard Gershen for his work on making this a better piece.

References

[1] S. Mullainathan, “Biased algorithms are easier to fix than biased people,” New York Times, Dec. 6, 2019. [Online]. Available: https://www.nytimes.com/2019/12/06/business/algorithm-bias-fix.html?searchResultPosition=1

See also Z. Obermeyer, B. Powers, C. Vogeli, and S. Mullainathan, “Dissecting racial bias in an algorithm used to manage the health of populations,” Science, vol. 366, no. 6464, pp. 447-453, Oct. 25, 2019. [Online]. Available: https://science.sciencemag.org/content/366/6464/447

[2] These sources informed the workbook:

- Dr. Christine Marie Ortiz Guzman, Equity Meets Design.

- The Ethical Explorer guide and Ethical OS, a partnership between the Institute for the Future, a think tank, and the Tech and Society Solutions Lab, a former initiative of the impact investment firm Omidyar Network. Available at: https://ethicalexplorer.org/ and https://ethicalos.org/

- The Creative Reaction Lab, specifically its webinar series. Available at: https://www.creativereactionlab.com/

- MITRE’s Innovation Toolkit. Available at: https://itk.mitre.org/

- MITRE’s Framework for Assessing Equity in Federal Programs and Policies. Available at: https://www.mitre.org/publications/technical-papers/a-framework-for-assessing-equity-in-federal-programs-and-policy

I am very grateful to build off their work.

[3] These come from the Ethical Explorer guide.

© 2022 The MITRE Corporation. All rights reserved. Approved for public release. Distribution unlimited. Case number 22-1400

