Mist Computing: Everything Computing Everywhere

Credit: The Opte Project, CC BY 2.5, via Wikimedia Commons

Author: Dr. Qiang Lin

One of the key metrics for measuring how fast a computer performs is the number of floating-point operations (i.e., arithmetic operations such as +, -, *, and / on two floating-point numbers) it can execute per second. You would be amazed if you compared the Apollo 11 Guidance Computer (with a peak performance of a “whopping” 12,245 floating-point operations per second) with the 16-core Apple Neural Engine in today’s iPhone 12 (capable of 11 trillion operations per second).
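To put the gap in perspective, the ratio of the two peak figures quoted above can be computed directly. This is a rough sketch that treats both numbers as comparable peak rates, which is a simplification: the Neural Engine figure counts machine-learning operations, not strictly floating-point ones.

```python
# Peak rates quoted in the text (treated as comparable for illustration only).
AGC_FLOPS = 12_245            # Apollo 11 Guidance Computer
NEURAL_ENGINE_OPS = 11e12     # iPhone 12 Apple Neural Engine

speedup = NEURAL_ENGINE_OPS / AGC_FLOPS
print(f"about {speedup / 1e6:.0f} million times faster")  # about 898 million times faster
```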

As computing technology has advanced since the 1950s, computing paradigms have evolved from the mainframe, to the supercomputer, to client-server computing, to cloud computing, and to today’s edge computing. With the miniaturization, easier access, and lower cost of Central Processing Units (CPUs), Graphics Processing Units (GPUs), and other computer components, computing has gone through an interesting cycle: from centralization (i.e., the mainframe and supercomputer), to decentralization (i.e., client-server), back to centralization (i.e., cloud computing), and, finally, back to decentralization (i.e., edge computing).

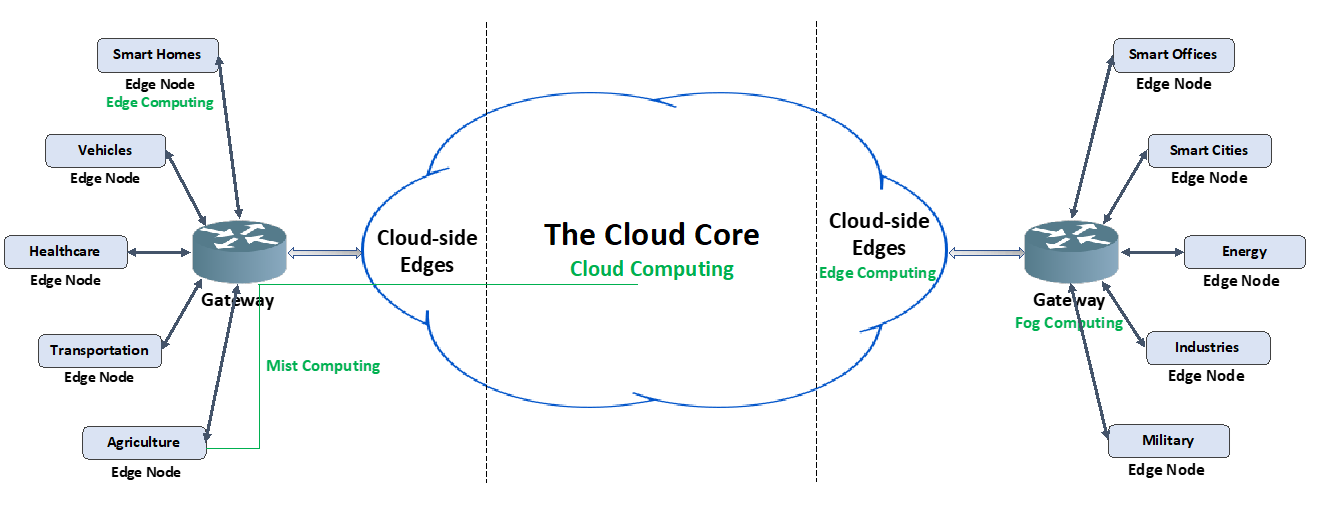

The term ubiquitous computing was coined by Mark Weiser of Xerox PARC in 1988 to describe a future in which personal computers would be replaced by so-called invisible computers (i.e., computers with no human interfaces) embedded in everyday objects (i.e., the Internet of Things, or IoT). With the ever-increasing deployment of IoT devices, including smart phones, wearable devices, and connected vehicles, and the wider availability of 5G mobile networks, computing has become more pervasive than ever before. We are getting close to the ubiquitous computing Weiser envisioned by combining four types of computing: cloud, fog, edge, and mist. Figure 1 illustrates these four types, which we have already encountered or will encounter.

Figure 1 – Cloud, Fog, Edge, and Mist Computing

Cloud Computing

With IoT and cloud computing, we push all sensor data and other data into the cloud and process it there, and humans then access the results through Web browsers. A data ingestion process in the cloud obtains and imports the data generated by IoT applications and stores it in a data lake (i.e., a very large storage repository in the cloud). Big data parallel processing, via Spark, Flink, Hive, or another tool, is then applied to the stored data, and the resulting fast-paced information is consumed to make decisions (e.g., fraud detection, anomaly detection, and ad-hoc analysis of live data). The best-known commercial cloud computing platforms include Amazon Web Services (AWS), Microsoft Azure, Google Cloud, IBM Cloud, and Oracle Cloud Infrastructure.
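The ingest-store-process pattern described above can be sketched in miniature. The example below is a hypothetical stand-in, not Spark, Flink, or Hive code: a dictionary plays the data lake (raw readings partitioned by device), a thread pool plays the cluster’s workers, and a map step computes partial statistics per partition before a reduce step combines them and flags anomalous readings. The sensor names, values, and the 2.5-standard-deviation threshold are all assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from math import sqrt

# Hypothetical "data lake": raw sensor readings partitioned by device ID.
data_lake = {
    "sensor-a": [20.1, 20.4, 19.8, 20.0],
    "sensor-b": [21.0, 20.7, 35.2, 20.9],
    "sensor-c": [19.9, 20.2, 20.3, 20.1],
}

def partial_stats(readings):
    """Map step: per-partition count, sum, and sum of squares."""
    return len(readings), sum(readings), sum(x * x for x in readings)

def flag_anomalies(lake, threshold=2.5):
    # Process partitions in parallel, as worker nodes would process data blocks.
    with ThreadPoolExecutor() as pool:
        stats = list(pool.map(partial_stats, lake.values()))
    # Reduce step: combine the partial results into a global mean and std dev.
    n = sum(s[0] for s in stats)
    mean = sum(s[1] for s in stats) / n
    std = sqrt(sum(s[2] for s in stats) / n - mean * mean)
    # Second pass: flag readings more than `threshold` std devs from the mean.
    return [
        (device, x)
        for device, readings in lake.items()
        for x in readings
        if abs(x - mean) > threshold * std
    ]

print(flag_anomalies(data_lake))  # [('sensor-b', 35.2)]
```

The map/reduce split is the point here: each partition is summarized independently, so the work scales out across nodes, and only small summaries travel to the reduce step.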

Fog Computing

IoT applications generate an unprecedented volume and variety of data, but by the time the data make their way to the cloud for analysis, the opportunity to act on them might be gone. Autonomy, for example, does not go well with the cloud. When you are in a moving vehicle that relies on the cloud to compute its essential functions, what happens if you lose connectivity to the cloud or communication is badly delayed? In a moving vehicle, such a lag can be a matter of life and death.

IoT can speed up awareness of and response to events. In industries such as manufacturing, oil and gas, utilities, transportation, mining, and the public sector, faster response times can improve output, boost service levels, and increase safety. For example, oil companies are exploring the use of connected sensor data and local computing, rather than the cloud, for safety-focused use cases such as real-time tracking of worksite safety conditions on oil rigs, to better mitigate emergencies.

These IoT applications will challenge cloud computing in the following ways:

- Bandwidth limitation: Capitalizing on IoT requires a new kind of infrastructure. Today’s cloud models are not designed for the volume, variety, and velocity of data that IoT generates. Billions of previously unconnected devices are generating more than two exabytes of data each day. Billions more “things” will be connected to the internet each year. Moving all data from these things to the cloud for analysis would require vast amounts of bandwidth.

- Long latency: Milliseconds matter when engineers are trying to prevent manufacturing line shutdowns or restore electrical service. Analyzing data close to the devices that collected the data can make the difference between averting disaster and a cascading system failure.
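A quick back-of-the-envelope calculation shows why the bandwidth limitation bites. This sketch converts the “two exabytes of data each day” figure into a sustained network rate, assuming decimal units (1 EB = 10^18 bytes), no compression, and data spread evenly over the day. Real traffic is bursty, so peak demand would be higher than this lower bound.

```python
SECONDS_PER_DAY = 24 * 60 * 60
BYTES_PER_EXABYTE = 10**18          # decimal units assumed

daily_bytes = 2 * BYTES_PER_EXABYTE  # "more than two exabytes of data each day"
bits_per_second = daily_bytes * 8 / SECONDS_PER_DAY
print(f"{bits_per_second / 1e12:.0f} Tbps sustained, around the clock")  # 185 Tbps
```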

Fog computing, introduced by Cisco, addresses the timeliness of IoT data by:

- Analyzing the most time-sensitive data at the network edge, on a gateway or router (devices that generally have no human interfaces, although network engineers can access them) close to where the data is generated, instead of sending vast amounts of IoT data to the cloud.

- Acting on IoT data in milliseconds, based on a data latency policy (e.g., one stating that latency-sensitive data is to be analyzed on fog nodes rather than sent to the cloud).

- Sending selected data to the cloud for historical analysis and longer-term storage.
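The three fog-node behaviors listed above can be sketched as a small routing class. This is a hypothetical illustration, not Cisco’s implementation: the `LATENCY_SENSITIVE` set stands in for a data latency policy, `alarm_limit` is an assumed safety threshold, and `flush_to_cloud` represents sending selected data to the cloud for historical analysis.

```python
from dataclasses import dataclass, field

# Hypothetical data latency policy: sensor kinds whose readings must be
# analyzed on the fog node rather than sent to the cloud.
LATENCY_SENSITIVE = {"pressure", "gas"}

@dataclass
class Reading:
    kind: str
    sensor_id: str
    value: float

@dataclass
class FogNode:
    alarm_limit: float                               # hypothetical safety threshold
    cloud_queue: list = field(default_factory=list)  # data selected for the cloud

    def handle(self, r: Reading) -> str:
        if r.kind in LATENCY_SENSITIVE:
            # Analyze and act locally, close to where the data was generated.
            return f"shutdown {r.sensor_id}" if r.value > self.alarm_limit else "ok"
        # Not time-critical: hold it for historical analysis in the cloud.
        self.cloud_queue.append(r)
        return "queued"

    def flush_to_cloud(self) -> list:
        """Return the queued readings for upload and clear the local queue."""
        batch, self.cloud_queue = self.cloud_queue, []
        return batch

node = FogNode(alarm_limit=100.0)
print(node.handle(Reading("pressure", "p-7", 120.0)))    # shutdown p-7
print(node.handle(Reading("temperature", "t-3", 45.0)))  # queued
```

The key design choice is that the decision to act never waits on the cloud; only data the policy marks as non-urgent is batched for upload.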

Edge Computing

Consider that a self-driving car is effectively a data center on wheels, a drone is a data center with wings, a robot is a data center with arms and legs, a boat is a floating data center, and so on. Each of these IoT devices is called an edge node, and each has ample embedded computing power without any human interfaces. There are about 100 CPUs embedded inside a luxury automobile today, and that is not even a self-driving car. When we think about connecting thousands of cars together, we are looking at a massive, distributed computing system at the edge of the network. Stan Dmitriev at Tuxera says a car with L2 autonomy can generate about 25 GB of data per hour, and these are real-time, real-world data about our environment: vision, location, acceleration, temperature, gravity, and other factors. Real-time data processing must occur at the edge node where the real-world information is being collected.
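Converting the 25 GB/hour figure into a network rate shows what it would take to ship that data off the car. This sketch assumes decimal gigabytes, a steady rate over the hour, and a hypothetical fleet of 1,000 cars to echo the “thousands of cars” scenario above.

```python
GB = 10**9                     # decimal gigabytes assumed
data_per_hour = 25 * GB        # L2 car, per the Tuxera estimate quoted above
fleet_size = 1_000             # hypothetical fleet of connected cars

mbps_per_car = data_per_hour * 8 / 3600 / 1e6
fleet_gbps = mbps_per_car * fleet_size / 1000
print(f"{mbps_per_car:.1f} Mbps per car")                 # 55.6 Mbps per car
print(f"{fleet_gbps:.1f} Gbps for {fleet_size} cars")     # 55.6 Gbps for 1000 cars
```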

For example, when a connected car’s camera captures an image of a stop sign, the car processes the data, slows down, and stops. It may not even have enough time to transmit the image of the stop sign to a gateway performing fog computing and receive the commands back to slow down and stop, especially if visibility is poor due to rain, snow, or darkness. Edge computing pushes the intelligence, processing power, and communication operations of a gateway directly into the IoT devices themselves.
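The stop-sign example comes down to distance traveled while waiting for an answer. The sketch below computes how far a car moves at constant speed during a processing delay. The 100 km/h speed and the three round-trip delays are illustrative assumptions, not measured values for any real vehicle, gateway, or cloud service.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres traveled at constant speed while waiting delay_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000

# Hypothetical round-trip delays for on-board, fog, and cloud processing.
for label, delay_ms in [("on-board", 10), ("fog gateway", 50), ("cloud", 200)]:
    d = distance_during_delay(100, delay_ms)
    print(f"{label:12} {delay_ms:4} ms -> {d:.2f} m before the car reacts")
```

Under these assumptions, a 200 ms cloud round trip means the car covers more than 5 m before it can even begin to brake, which is why the decision is made on board.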

As more and more edge nodes are deployed and connected online, cloud computing vendors are beginning to provide special edge computing capabilities within the cloud (i.e., the cloud-side edge depicted in Figure 1), such as AWS IoT Greengrass and Microsoft Azure IoT Edge. These offer better performance and faster response, although they do not ease the bandwidth limitation.

Mist Computing

Mist nodes are at the edge, in the fog, and in the cloud, all working in concert as a single decentralized, distributed mesh computer: the mist (see the green line of mist computing in Figure 1). Without human interfaces, mist computing routes computations to the right node, at the right time, in the right location, typically as close to the source of the data as possible. For that reason, mist computing is sometimes called Everything Computing Everywhere, which comes close to Weiser’s vision of ubiquitous computing.
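The idea of routing a computation to the right node, as close to the data source as possible, can be sketched as a simple placement function. This is a hypothetical illustration rather than any specific mist platform: network hops stand in for latency, `free_cores` for spare capacity, and `capabilities` for what software a node can run. A task goes to the nearest node that can handle it, falling back to fog or cloud nodes only when closer ones cannot.

```python
from dataclasses import dataclass

@dataclass
class MistNode:
    name: str
    tier: str               # "edge", "fog", or "cloud"
    hops_from_source: int   # network hops from the data source (latency proxy)
    free_cores: int
    capabilities: frozenset

def place_task(nodes, cores_needed, needs=None):
    """Return the name of the qualifying node closest to the data source."""
    candidates = [
        n for n in nodes
        if n.free_cores >= cores_needed and (needs is None or needs in n.capabilities)
    ]
    if not candidates:
        return None  # no node can take the task right now
    # Fewest hops wins; ties go to the node with the most spare capacity.
    best = min(candidates, key=lambda n: (n.hops_from_source, -n.free_cores))
    best.free_cores -= cores_needed  # reserve the capacity
    return best.name

mesh = [
    MistNode("camera-1", "edge", 0, 2, frozenset()),
    MistNode("gateway-1", "fog", 1, 8, frozenset({"object-detection"})),
    MistNode("region-1", "cloud", 4, 512, frozenset({"object-detection", "face-db"})),
]

print(place_task(mesh, 1))                            # camera-1
print(place_task(mesh, 4, needs="object-detection"))  # gateway-1
print(place_task(mesh, 2, needs="face-db"))           # region-1
```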

Consider running a network of computer vision cameras that perform real-time Machine Learning (ML) processing on their own hardware. The network could use the mist to distribute live video streams to other camera mist nodes on a private mesh computer. Mist nodes whose video frames are static could help mist nodes with moving frames run ML algorithms that count people and detect activities, weapons, and so on. If a weapon is detected, for instance, the mist could start forwarding frames to mist nodes in the cloud, where facial recognition databases reside, to identify suspects in the live video stream. The cloud nodes could also dispatch security and police and notify people of the threat.
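The camera scenario combines two mist behaviors: sharing work among peer nodes and escalating to the cloud. The sketch below is hypothetical throughout: camera names are invented, the number of frames each camera is analyzing serves as its load (cameras watching static scenes stay lightly loaded), and the ML detector itself is out of scope, so `on_detection` simply receives its label.

```python
# Hypothetical camera mist nodes and the number of frames each is analyzing.
# Cameras watching static scenes stay lightly loaded and have spare capacity.
load = {"cam-lobby": 0, "cam-garage": 0, "cam-entrance": 0}
cloud_queue = []  # frames escalated to cloud mist nodes

def assign_frame(source_cam):
    """Send a frame to the least-loaded camera, preferring its own source."""
    target = min(load, key=lambda cam: (load[cam], cam != source_cam))
    load[target] += 1
    return target

def finish_frame(cam):
    """Release capacity once a camera finishes analyzing a frame."""
    load[cam] -= 1

def on_detection(label, frame_id):
    """Escalate frames with a weapon detection to the cloud."""
    if label == "weapon":
        cloud_queue.append(frame_id)  # cloud nodes hold the face-recognition DB
        return True
    return False

print(assign_frame("cam-entrance"))  # cam-entrance (all idle, keep it local)
print(assign_frame("cam-entrance"))  # cam-lobby (entrance is now busier)
```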

Many of the edge nodes, such as cell phones, connected cars, and smart home devices, are becoming a part of mist computing, where data is processed at the furthest reaches of the edge. This concept of mist computing is fundamental because we are in an era of unprecedented and growing connectivity.

MITRE has been at the forefront of computing technology research and works as a trusted advisor to Federal agencies and enterprises. In July 2019, a MITRE research team was awarded two patents by the U.S. Patent and Trademark Office: “Cross-cloud Orchestration of Data Analytics for a Plurality of Research Domains” and “Cross-cloud Orchestration of Data Analytics.” MITRE also helps our sponsors weigh the pros and cons of moving to the cloud and to newer computing paradigms as they become available.

Qiang Lin is an Information Systems Engineer at MITRE and Adjunct Faculty in the Department of Electrical and Computer Engineering at George Mason University. He worked at Deloitte and SAIC previously and has a PhD in Computer Engineering from West Virginia University.

© 2021 The MITRE Corporation. All rights reserved. Approved for public release. Distribution unlimited. Case number 21-0144

