Challenges in Autonomy

Photo by Jeremy Bishop on Unsplash

Author: Amanda Andrei

Autonomy is a broad and complex topic that overlaps with artificial intelligence, unmanned systems, and human domains, each of which relies on systems engineering concepts to make sense of and use new technologies. Among the many challenges in this field, senior autonomous intelligent systems engineer Dr. Carrie Rebhuhn identifies three that stand in the way of broader adoption of autonomy, for both humans and robots.

Operating in Non-Lab Conditions

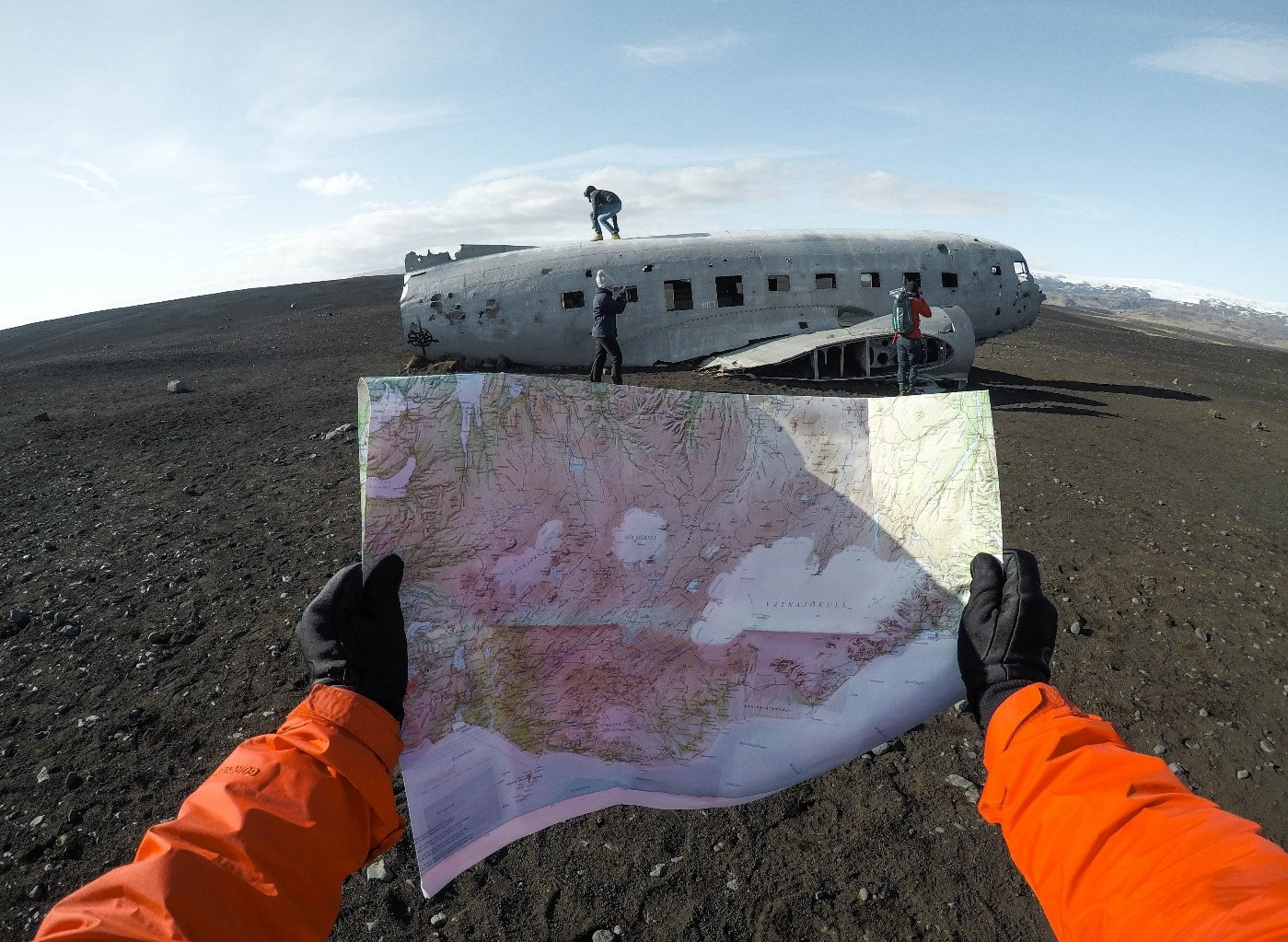

This challenge applies especially to autonomy in motion. Much of the testing for robots and physical systems is conducted in carefully monitored conditions, where the engineers know everything about the robot and its environment. One of Rebhuhn’s projects involves a robot navigating through an area without GPS, which makes it tricky for the robot to figure out where it is, which terrain it can legitimately traverse, and how to accomplish its goal in that area, all with restricted sensors.

“In the lab, you have this omniscient view of the universe,” Rebhuhn explains. “If you take a robot outside of a laboratory, a lot of times things are completely different—different lighting, terrain, and often there are lots of people outside, so you don’t necessarily have control over every aspect of your environment.”

Testing in non-lab conditions can be dangerous, even deadly. In March 2018, an autonomous vehicle operated by Uber struck and killed a 49-year-old woman—an incident which is believed to be the first fatality from testing autonomous vehicles. Some critics suggest that though autonomous vehicles might be safer, the future is a “legal minefield” and questions remain about “the price of progress.”

Therein lies the paradox. Real-world tests are essential to discovering the gaps in our controlled testing environments. However, risk management is crucial for the continuing development of robotics. Many testing errors are predicated on human assumptions about how an autonomous system functions. Thus, it is an open question for engineers, designers, and companies: how do we manage perceptions and beliefs about autonomy in our artificial intelligence systems and machines?

Human Interaction Side of Autonomy

As mentioned in the previous blog post, autonomy raises issues about morality and human expectations of a robot’s abilities to serve humans. Rebhuhn sees two sides to this issue: “There’s trying to get robots to better understand what humans want from them, but also getting humans to understand how the robot is making its decision.”

For instance, as autonomous systems are constantly learning and adapting, their behaviors will change over time – which could lead to surprises during operations. The Defense Science Board’s Summer Study on Autonomy provides several ways to respond to this challenge, including:

- Providing the human operator with a system’s training history

- Rehearsing missions with human and machine teams

- Designing systems with “self-awareness” (the ability to assess their own internal health and their external environment and context), which they can report to machines or humans as needed

Trust and trustworthiness between humans and machines play a critical role here. Trust has multiple, complex dimensions, such as competence, reliability, common sense, and non-deception. The trustworthiness of a system arises from defining a set of those trust attributes and observing the behaviors of the system in operation. Trustworthiness is best established at design time, but it still needs to be tested and evaluated once the system is operating in the field.

Getting Robots to Cooperate with Other Robots

Another of Rebhuhn’s projects involves swarms: large numbers of unmanned aerial vehicles (UAVs) interacting with each other. The goal is to have them move together as a unit, ensuring that their paths do not conflict with each other, while making sure the operator does not need to micromanage the entire group of machines.
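To make "paths that do not conflict" concrete, here is a minimal sketch of a conflict check. It is an illustration only and not the method used in Rebhuhn's project: it assumes each UAV's path is a list of 2-D waypoints sampled at shared time steps, and the names `find_conflicts` and `min_separation` are hypothetical.

```python
from itertools import combinations
from math import dist


def find_conflicts(paths, min_separation=1.0):
    """Return (uav_a, uav_b, step) for each time step at which two UAVs
    are closer than min_separation. Assumes all paths share the same clock."""
    conflicts = []
    # Compare every pair of UAVs once, in a stable (sorted) order.
    for (a, path_a), (b, path_b) in combinations(sorted(paths.items()), 2):
        for step, (pos_a, pos_b) in enumerate(zip(path_a, path_b)):
            if dist(pos_a, pos_b) < min_separation:
                conflicts.append((a, b, step))
    return conflicts


# Hypothetical planned paths: uav1 and uav2 cross at the same point at step 1.
paths = {
    "uav1": [(0, 0), (1, 1), (2, 2)],
    "uav2": [(2, 0), (1, 1), (0, 2)],
    "uav3": [(0, 5), (1, 5), (2, 5)],
}
print(find_conflicts(paths))  # [('uav1', 'uav2', 1)]
```

A check like this only flags conflicts in paths that are already planned. Resolving them without operator micromanagement is the harder problem the swarm work targets.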

Self-organization is a hard problem, though. Robots don’t have the social intuition that humans use when cooperating with crowds of other humans. “Robots are inherently self-interested,” Rebhuhn says. “A lot of multi-agent systems research is getting emergent behavior that appears altruistic to us, but is actually the robot optimizing an objective function that has been specifically tailored for it to seem to be cooperating with others.”

Swarm robotics takes its inspiration from real swarms in nature: colonies of ants, flocks of birds, and schools of fish. Each robot optimizes its own objective using limited information, and the group's emergent behavior looks like cooperation toward a shared goal. The tasks that can be performed with this method are quite diverse: multi-agent techniques have applications ranging from search and rescue to air traffic control. For her doctoral research, Rebhuhn used a group of intelligent agents to improve emergent autonomous traffic flow.
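The idea of self-interested robots whose tailored objectives produce cooperative-looking behavior can be sketched in a few lines. This is a toy illustration under stated assumptions, not Rebhuhn's research code. Robots live on a 2-D grid and take turns moving. Each senses only neighbors within a hypothetical `sense_radius`. The cost function, move set, and penalty value are invented for the example.

```python
from math import dist

# Candidate moves for one turn: stay put, or step one cell in a cardinal direction.
MOVES = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def local_cost(pos, goal, neighbors, min_gap=1.0, penalty=10.0):
    """Selfish objective: get close to the goal, avoid crowding sensed neighbors."""
    cost = dist(pos, goal)
    cost += sum(penalty for n in neighbors if dist(pos, n) < min_gap)
    return cost


def step(positions, goal, sense_radius=3.0):
    """Each robot, in turn, greedily picks the move that minimizes its own cost."""
    positions = list(positions)
    for i, pos in enumerate(positions):
        # Limited information: a robot only knows about nearby robots.
        neighbors = [p for j, p in enumerate(positions)
                     if j != i and dist(pos, p) <= sense_radius]
        candidates = [(pos[0] + dx, pos[1] + dy) for dx, dy in MOVES]
        positions[i] = min(candidates, key=lambda c: local_cost(c, goal, neighbors))
    return positions


def total_distance(positions, goal):
    return sum(dist(p, goal) for p in positions)


swarm = [(0, 0), (0, 1), (1, 0)]
goal = (5, 5)
for _ in range(10):
    swarm = step(swarm, goal)
print(swarm, round(total_distance(swarm, goal), 2))
```

No robot in this sketch is told to make room for the others. The group converges on the goal without two robots occupying the same cell only because the crowding penalty is built into each robot's own objective.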

These are only a few of the challenges in the huge, complex, and interdisciplinary space of autonomy. MITRE is taking on these challenges and more by focusing on certain areas such as human-machine teaming, high-assurance machine learning, and integration and adoption of autonomy.

Amanda Andrei is a computational social scientist in the Department of Cognitive Sciences and Artificial Intelligence. She specializes in social media analysis, designing innovative spaces, and writing articles on cool subjects.

© 2018 The MITRE Corporation. All rights reserved. Approved for public release. Distribution unlimited. Case number 18-1338
