Rest, Motion, and Morality: Introduction to Autonomy

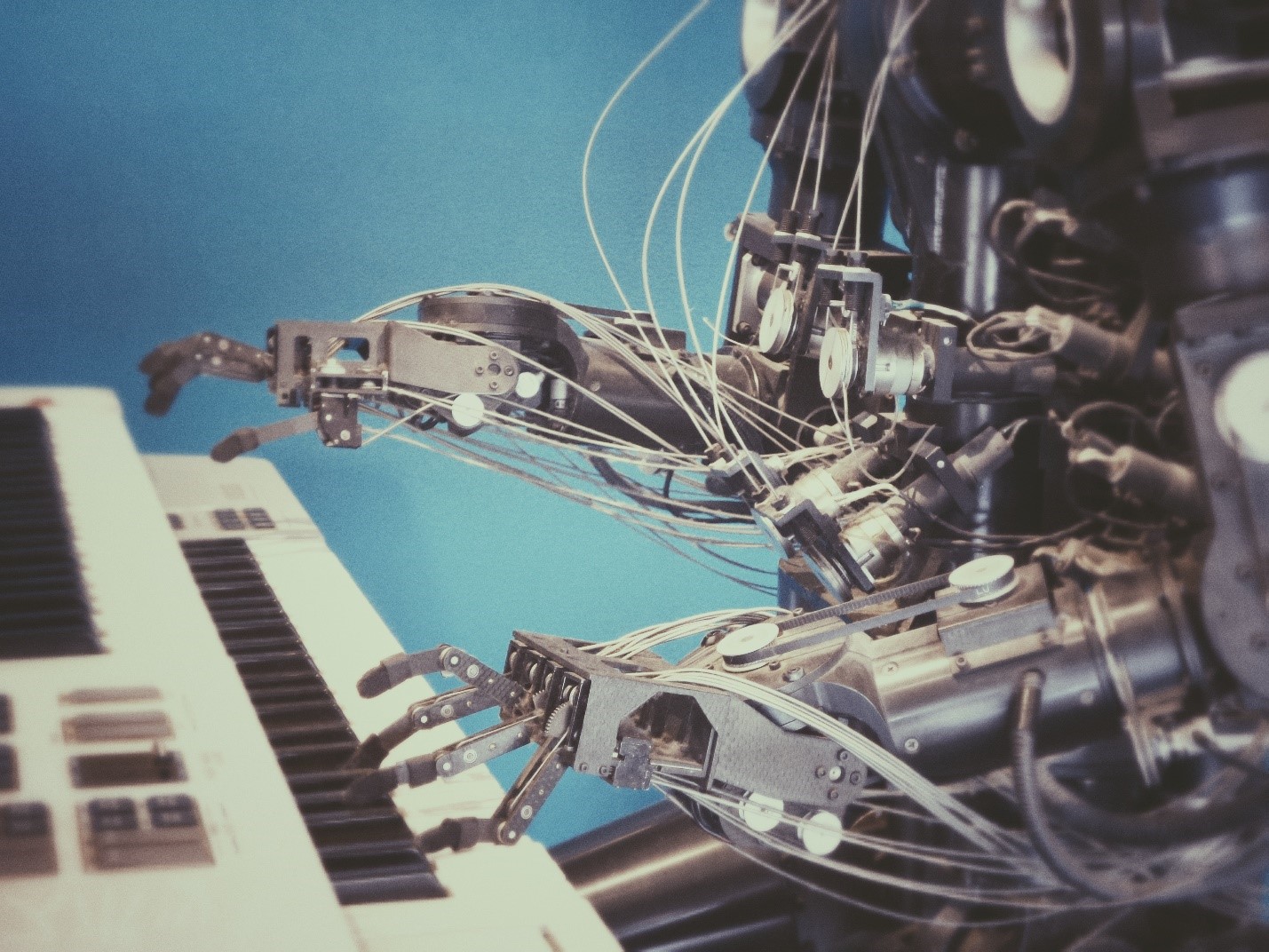

Photo by Franck Veschi on Unsplash

Author: Amanda Andrei

The word autonomy tends to come up in the context of autonomous vehicles – self-driving cars that take passengers wherever they need to go without human input. But autonomy extends to other entities: robots, unmanned aerial vehicles (UAVs), and artificial intelligence (AI) embedded into an expert advisory system, such as air traffic control. The concept of autonomy lives in most of the technology around us and becomes increasingly important as people place more and more trust in the decisions of these machines and embedded systems.

Autonomy at Rest, Autonomy in Motion

The Defense Science Board’s Summer Study on Autonomy categorized intelligent systems in two ways: those that use autonomy at rest and those that use autonomy in motion.

Autonomy at rest refers to systems that operate virtually, typically in a software-only environment. These include planning and expert systems, natural language processing, and image classification systems – automatic methods of assisting someone with a decision or finding an optimal area in a search space. You’ll find autonomy at rest operating in areas of data compilation, data analysis, web search, recommendation engines, and forecasting – so everywhere from what you search on Google to what book Amazon recommends to you next.

Autonomy in motion refers to systems that operate in the physical world, such as robots and autonomous vehicles. Because the decisions these systems make are applied directly to the physical environment, they must take into account factors such as weather, amount of light, terrain, presence of people, and more. For instance, an autonomous vehicle needs to sense rain or snow, night or day, highway or dirt road, and urban or rural environment to drive safely and effectively.

Right now, most systems are operated remotely instead of being autonomous. But as MITRE senior autonomous intelligent systems engineer Dr. Carrie Rebhuhn points out, “Humans can be really bad at handling large amounts of data – that’s the main reason we really need autonomy. For example, if we look at a system controlling multiple robots at once, there’s a lot of data coming from each of those robots that needs to be responded to quickly! You don’t necessarily want a human dictating every step in the path a robot will take in its environment.”

Autonomy and Perceptions of Morality

But if a human does not dictate or guide every step in an autonomous system’s decision-making process, what does this mean for behaviors, social norms, and eventually… morality? When do an autonomous system’s actions have social and moral consequences?

In December 2017, MITRE’s Innovation Brown Bag series invited Dr. Bertram F. Malle, Professor of Psychology in the Department of Cognitive, Linguistic and Psychological Sciences at Brown University, to give a talk on People’s Perceptions of Autonomous Systems as Moral Agents. Malle presented several case studies about how people respond to morally significant decisions made by robots, artificial intelligence, and other autonomous agents.

What is socially and morally significant? For the purposes of the presentation, Malle answered this question with the working definition of “behaviors that conform to or violate a social or moral norm.” For example, Americans would assume that a humanoid robot interacting with them would look directly into their eyes – but in some Asian cultures, this action could be interpreted as rude or insulting. Or consider a nursing assistant robot that monitors a person’s pain level and is asked by the patient to increase the dosage beyond what the doctor prescribed (and for some reason, the robot might not be able to reach the physician to move beyond this dilemma). What rule system within the robot can solve this problem? What norm can be broken?

Malle and his teams sought to find out how humans perceive the decisions that autonomous robots make – whether they apply moral norms or judgments to robots’ behavior. They presented human respondents with scenarios based on the trolley problem and asked questions such as “How much blame does the [repairman | robot] deserve for [switching | not switching] the train onto the side rail?”

The team found that people have different expectations for humans than for robots – for instance, expecting that robots would take action over inaction and blaming robots less for acting and more for failing to act in the hypothetical life-and-death situations. (Interestingly enough, one third of the sample even dismissed the idea of robot moral agency in general, stating that robots are incapable of moral decisions in the first place.)

Malle also pointed out that autonomous and artificial intelligence systems that are uncritically learning, detecting patterns, and mimicking can lead to disastrous and unintended consequences – such as insulting, prejudiced, or racist behaviors. By understanding how humans perceive these autonomous systems and by critically thinking about the social and moral norms a system might be trained on, engineers and designers can develop ethically responsible agents.

There are even more challenges to designing, developing, and maintaining autonomous systems. Stay tuned for our next blog post, Challenges in Autonomy, to learn more.

Amanda Andrei is a computational social scientist in the Department of Cognitive Sciences and Artificial Intelligence. She specializes in social media analysis, designing innovative spaces, and writing articles on cool subjects.


© 2018 The MITRE Corporation. All rights reserved. Approved for public release; distribution unlimited. Case number 18-1176

The MITRE Corporation is a not-for-profit organization that operates research and development centers sponsored by the federal government. Learn more about MITRE.
